19 February 2024, 14:09 | Updated: 19 February 2024, 14:13

For many, a standard job interview is daunting enough, but now a father has taken to X/Twitter to reveal his ‘horror’ that his daughter will be interviewed by emotion-reading AI.

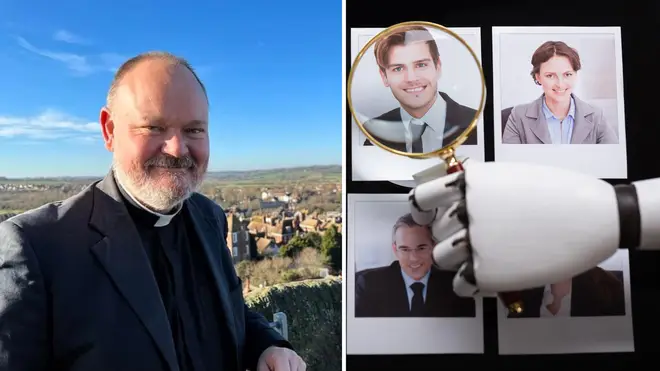

Reverend Paul White took to the social media platform to share his dismay at how his daughter’s graduate job interview will be conducted.

Whilst Mr White, the Team Rector of Rye Team Ministry, congratulated his daughter on securing an interview, he highlighted what he saw as the "horrific" side of the good news.

On X, the father wrote: "Please share my horror - my daughter has a first interview for a graduate job next week, which is good.

"The horrific part is that she is being interviewed BY AN AI PROGRAM WHICH WILL BE READING THE EMOTIONS ON HER FACE!

"The robots are deciding who gets employed…"

— Fr Paul ✠ (@revpaulwhite) February 18, 2024

The post, which has now been viewed more than 200,000 times, has gathered more than 600 replies, with many users just as shocked.

One person asked why his daughter would apply for such a job, writing: "You have my sympathy Fr Paul. I have only one question. Why would your daughter want to work for a company like that?"

To which Mr White replied: "When she applied she didn't know that's how they would interview and, like many coming out of university, she doesn't want to turn things down out of hand…"

Three other users described the AI tool as "disturbing", "terrible" and "ethically totally questionable".

Another user sympathised with Mr White and his daughter, arguing that such interviews shut out "different thinkers".

They wrote: "My son has had this. It’s awful.

"Graduate training programmes are interviewing by AI. AI reads CVs, AI interviews, AI decides.

"They’re not going to employ people 'outside the box' - what chance do the different thinkers have?"

Many others warned that the tool could effectively blacklist neurodivergent people, particularly those who do not display the facial expressions expected in a given context.

The type of emotion-reading AI being used has not been specified.

The AI boom began in 1980, when computers became able to learn from their mistakes and make independent decisions.

Today, AI tools cover text generation, such as ChatGPT, as well as image and video creation from written prompts.

AI is also being used for good, for example to help save bee populations, assist disabled people and conserve wildlife.

However, as the technology spreads, some users have exploited it to create inappropriate and highly disturbing pornographic images.

Dan Sexton, the Chief Technical Officer at the Internet Watch Foundation (IWF), told LBC that even though the images are AI-generated, creating them is not a "completely victimless crime".

He said: "There are real victims being used here.

"Not only do they have to worry about their imagery being distributed on the internet and viewed and shared but now their imagery is being used as a tool to create new imagery of abuse.

"So the whole cycle is problematic in so many places."

A Home Office spokesperson said: "Online child sexual abuse is one of the key challenges of our age, and the rise in AI-generated child sexual abuse material is deeply concerning.

"We are working at pace with partners across the globe to tackle this issue, including the Internet Watch Foundation."

LBC's Andrew Marr has called AI "a clever monkey that we need to be worried about".

Even the biggest stars have been targeted by AI-generated images. In one recent example, pornographic AI-generated images of Taylor Swift circulated widely, prompting US senators to introduce a bill to criminalise the spread of non-consensual, sexualised images generated by AI.